This post was contributed by Dr. Elisha Rosensweig, Head of Data Science at Verbit. Elisha holds a Ph.D. in Computer Science from the University of Massachusetts Amherst and an M.Sc. in Computer Science from Tel Aviv University. Prior to his four years at Verbit, he served as Head of Israel Engineering at Chorus.ai and as an R&D Director at Nokia, formerly Alcatel-Lucent. He regularly speaks on panels and is an expert in data and its implications for AI, transcription, captioning and more.

Today, transcription is being used in a variety of scenarios and formats. Whether it’s a court hearing or an online class, many professionals, students and consumers rely on accurate transcription to perform their daily tasks and job roles.

These transcription services are often accompanied by an interesting, yet challenging counterpart – live captions. This service, which is primarily designed to provide HoH (hard of hearing) and deaf students with access to their classes in real-time, faces a considerable challenge.

On the one hand, transcribing takes time – even an ultra-fast Artificial Intelligence needs to wait for a speaker to finish saying something before it can transcribe what was said. With more context (that is, longer audio segments), the AI can greatly improve caption accuracy. On the other hand, too long a delay between the spoken words and the corresponding captions on the screen makes the experience quite frustrating. It creates a confusing disconnect between what is happening on the screen and the captions that are shown.

This apparent tension prompted Verbit’s leadership to ask – are delay and accuracy separate dimensions of the captioning experience? Edward De Bono is known to have said, “perception is real, even if it is not reality.” It turns out that with live captions, accuracy and the perception of accuracy are not the same thing, and delay plays a key role in that perception.

Let’s experiment

To test this, Verbit’s research team provided 60 Verbit employees with two versions of the same video, both of which shared almost the same caption text. The main distinguishing feature between the two versions was the caption delay: in one, the captions were delayed by a few seconds relative to the video/audio, while in the other the captions were completely synced with the speaker. These were classified as the 1) delayed and 2) synchronized (synced) versions.

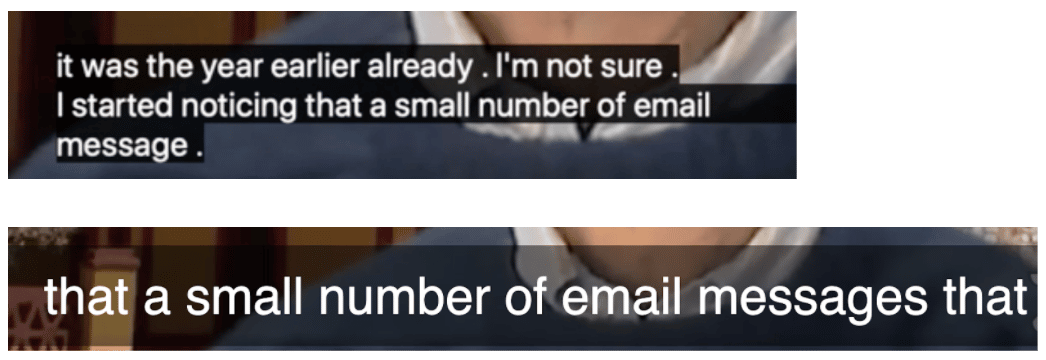

We also modified the visuals of the two sets of captions, so as not to “give away” that the caption text presented was nearly identical. In the delayed version, the captions appeared gradually, line by line, displaying 2-3 lines at a time. In the synced version, only a single line was displayed at a time, in a larger font. Sample snapshots of these two versions appear below.

Finally, we asked participants to watch a few minutes of each video and then fill out a survey giving their opinion on the accuracy of the captions. They rated the accuracy with a score from 1 (terrible) to 10 (amazing). They were also asked to score their overall experience on the same scale, to help the research team differentiate between the overall experience and the perception of accuracy.

Perception is everything

The findings were dramatic: among our participants, the perception of accuracy was impacted significantly by the delay.

A note about the methodology: Since different people might rate accuracy differently based on their own intuition, this research focused on analyzing the difference in each participant’s accuracy scores between the delayed and synchronized versions of the captions. Thus, a shift from a “4” to a “6” was treated the same as a shift from a “7” to a “9” – in both cases, a 2-point impact is seen.
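The scoring approach described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the research team’s actual analysis code: the participant names, the ratings and the `score_shift` helper are all hypothetical.

```python
# Hypothetical (delayed, synced) accuracy ratings on the 1-10 scale.
# These values are made up for illustration; they are not the survey data.
ratings = {
    "participant_a": (4, 6),
    "participant_b": (7, 9),
    "participant_c": (8, 8),
}


def score_shift(delayed: int, synced: int) -> int:
    """Return how many points higher the synced version was rated."""
    return synced - delayed


# Compare each participant only with themselves, so that personal
# differences in how strictly people rate cancel out.
shifts = {name: score_shift(d, s) for name, (d, s) in ratings.items()}

print(shifts)
# {'participant_a': 2, 'participant_b': 2, 'participant_c': 0}
```

Participants A and B start from very different baselines, yet both register the same 2-point shift, which is exactly the property the methodology relies on.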

A review of some of the stats:

The survey results show that 70% of the participants considered the synced captions more accurate, while only ~7% thought the reverse. It’s important to recall that there was actually no significant difference in accuracy between the two texts. The impact of this synchronization was very strong at times – half of those who responded rated the synced version at least 2 points better than the delayed version, and 20% rated it at least 5 points better, on the 10-point scale.
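For readers who want to run a similar survey, here is a rough sketch of how shares like these could be computed, assuming each participant’s shift is defined as their synced score minus their delayed score. The `summarize_shifts` function and the example list are hypothetical and do not reproduce Verbit’s data or code.

```python
from typing import Callable, Dict, Sequence


def summarize_shifts(shifts: Sequence[int]) -> Dict[str, float]:
    """Summarize per-participant shifts (synced score minus delayed score)."""
    total = len(shifts)
    if total == 0:
        raise ValueError("at least one participant is required")

    def share(condition: Callable[[int], bool]) -> float:
        # Fraction of participants whose shift satisfies the condition.
        return sum(1 for s in shifts if condition(s)) / total

    return {
        "synced_rated_higher": share(lambda s: s > 0),
        "delayed_rated_higher": share(lambda s: s < 0),
        "at_least_2_points": share(lambda s: s >= 2),
        "at_least_5_points": share(lambda s: s >= 5),
    }


# Made-up shifts for ten imaginary participants (not the survey data).
example_shifts = [5, 3, 2, 2, 1, 1, 0, 0, 0, -1]
summary = summarize_shifts(example_shifts)

for label, value in summary.items():
    print(f"{label}: {value:.0%}")
```

Note that in this sketch every share is taken over all participants, including those who rated both versions the same.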

Ahem… what about the audio?

It’s important to note that this survey was conducted internally, with mostly hearing individuals. As such, the conclusions from this experiment are suspect until proven in the field with target users, but the research team said there’s good reason to believe that the results here will also apply to the HoH community. Why? For those without hearing loss, the perception of accuracy is probably impacted by delay because the brain has a difficult time aligning the text read with the audio heard. For HoH individuals, who are more likely to be proficient in lip reading, this experience might be similar to trying to align captions with the speakers’ lips.

One way to test this idea on hearing students is to re-run our experiment using a presentation that has slides. In this scenario, the hearing student will also be distracted when there is a disconnect between the text in the slides and what is being captioned.

What’s next?

If delay impacts people’s perception of accuracy, and live captioning requires a delay for strong performance, does that mean that HoH individuals are destined to always experience a sub-par captioning experience?

Thankfully, the answer is – no. Verbit’s team is working diligently to develop the next big thing in live captions. This product will provide high accuracy with practically zero delay in the captioning. When this product launches, Verbit will finally be able to provide deaf and HoH students and consumers with a superb, high-quality experience that will allow them to benefit from captions like never before.

Stay tuned, as more updates from Verbit are coming.

This post was contributed by Dr. Elisha Rosensweig, Shiri Gaber & Keren Kaplan of Verbit’s research team.